Yet more memristor news from HP Labs. An eye-blink after their stunning announcement that the memristor, the fourth fundamental passive electronic circuit element, actually exists, comes Nature Nanotechnology's advance online publication of significant progress in the practical fabrication of these devices.

Authors Yang, Pickett, Li, Ohlberg, Stewart and Williams, all of HP Labs in Palo Alto, state:

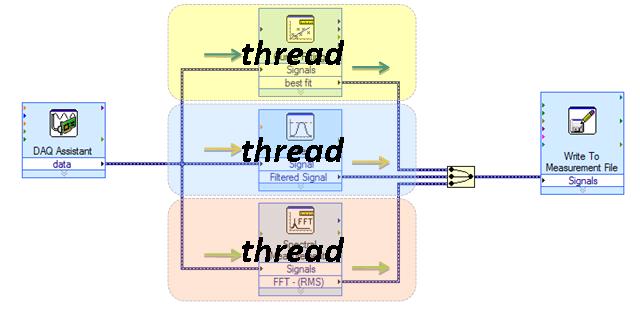

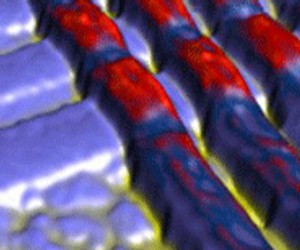

We have built micro- and nanoscale TiO2 junction devices with platinum electrodes that exhibit fast bipolar nonvolatile switching. We demonstrate that switching involves changes to the electronic barrier at the Pt/TiO2 interface due to the drift of positively charged oxygen vacancies under an applied electric field. Vacancy drift towards the interface creates conducting channels that shunt, or short-circuit, the electronic barrier to switch ON. The drift of vacancies away from the interface annihilates such channels, recovering the electronic barrier to switch OFF. Using this model we have built TiO2 crosspoints with engineered oxygen vacancy profiles that predictively control the switching polarity and conductance.

The keywords are "engineered oxygen vacancy profiles" and "predictively control." These indicate that memristors are hurtling from their emergence as a laboratory success story to land square in the everyday IC design toolkit, right before our eyes. Remarkable!

More remarkable is the unusual behavior of these devices. First, there's no doubt that they'll be used as replacements for flash memory and hard disks: they're faster, they use less energy, they appear to be denser (50 × 50 nm in the versions discussed in Nature Nanotechnology), they operate in parallel, and scalability seems to be a real strength. Given the world's exponentially growing appetite for information and information storage, this is all fine news for iPhones and laptops and other good things. But memristors' capability is not limited to storing 1s and 0s. That would be so 21st century. No, memristors can store values in between. They are analog devices, and they could make parallel analog computers practical. Those are common enough: you have one between your ears.
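To make the "values in between" idea concrete, here is a minimal Python sketch of a memristor whose resistance depends on an internal state x running from 0 (OFF) to 1 (ON). It assumes the simple linear ion-drift picture often used to describe HP's TiO2 memristor, in which the charge that flows through the device moves the state, rather than the Pt/TiO2 interface-barrier mechanism described in the abstract above. The class name `ToyMemristor` and every parameter value are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    """Toy memristor based on the linear ion-drift picture.

    Hypothetical sketch: parameter values are illustrative assumptions,
    not measurements from the HP Labs devices discussed above.
    """

    r_on: float = 100.0      # ohms when fully ON (assumed value)
    r_off: float = 16_000.0  # ohms when fully OFF (assumed value)
    k: float = 1.0e7         # state change per coulomb of charge (assumed value)
    x: float = 0.0           # normalized internal state: 0.0 = OFF, 1.0 = ON

    def resistance(self) -> float:
        # Resistance interpolates linearly between the OFF and ON values.
        return self.r_on * self.x + self.r_off * (1.0 - self.x)

    def apply_voltage(self, volts: float, seconds: float, dt: float = 1e-6) -> None:
        # Forward-Euler integration of dx/dt = k * i(t), with i = V / R(x).
        # In this toy convention, positive voltage pushes the state toward ON
        # and negative voltage pushes it back toward OFF; x is clipped to [0, 1].
        steps = int(round(seconds / dt))
        for _ in range(steps):
            current = volts / self.resistance()
            self.x = min(1.0, max(0.0, self.x + self.k * current * dt))


if __name__ == "__main__":
    cell = ToyMemristor()
    # Short 1 V, 100-microsecond pulses leave the cell in intermediate states.
    for pulse in range(1, 4):
        cell.apply_voltage(1.0, 100e-6)
        print(f"after pulse {pulse}: x = {cell.x:.3f}, R = {cell.resistance():.0f} ohm")
    # A negative pulse of the same length moves the state back toward OFF.
    cell.apply_voltage(-1.0, 100e-6)
    print(f"after reverse pulse: x = {cell.x:.3f}, R = {cell.resistance():.0f} ohm")
```

Because each pulse shifts the state by an amount that depends on how much charge flowed, pulse length and polarity decide where the cell settles between OFF and ON, and with no voltage applied the state simply stays put (nonvolatile). Real devices switch far more nonlinearly than this; the toy only shows a stored value that varies continuously rather than snapping to 0 or 1.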

HP Labs' Jamie Beckett spoke with the researchers:

"A conventional device has just 0 and 1 – two states – this can be 0.2 or 0.5 or 0.9," says Yang. That in-between quality is what gives the memristor its potential for brain-like information processing... "Any learning a computer displays today is the result of software. What we're talking about is the computer itself – the hardware – being able to learn."

Beckett elaborates:

...[Such a computer] could gain pattern-matching abilities [or could] adapt its user interface based on how you use it. These same abilities make it ideal for such artificial intelligence applications as recognizing faces or understanding speech.

Another benefit would be that such an architecture could be inherently self-optimizing. Presented with a repetitive task, or one requiring parallel processing, such a computer could be designed to route subtasks internally in increasingly efficient ways or using increasingly efficient algorithms. Tired of PCs that seem slower after a few months' use than when they were new? This computer would do just the opposite.

Beckett continues:

"When John Von Neumann first proposed computing machines 60 years ago, he proposed they function the way the brain does," says Stewart. "That would have meant analog parallel computing, but it was impossible to build at that time. Instead, we got digital serial computers."Not science fiction. Science fact. And soon--sooner than any of us imagined--it will be up to the engineers and entrepreneurs to leverage this in fantastical ways, using memristors as routinely as they do transistors, invented just sixty years ago.

Now it may be possible to build large-scale analog parallel computing machines, he says. "The advantage of those machines is that intrinsically they can do more learning."